Cilium: Empowering Kubernetes Networking and Security with BPF

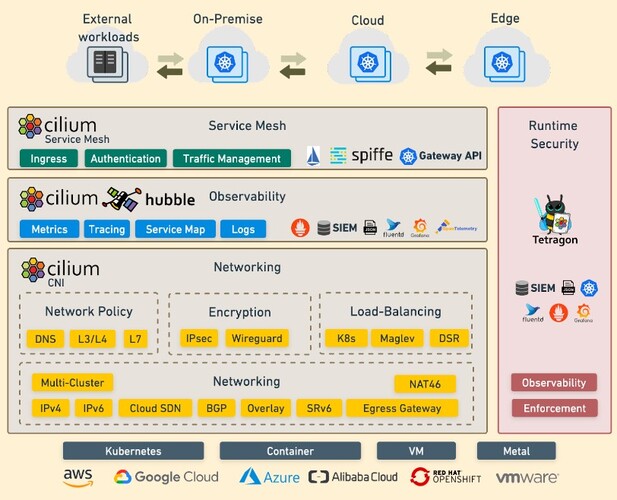

Using BPF as an underlying engine, Cilium creates a networking stack precisely optimised for running API-driven microservices on platforms like Kubernetes. We’ll focus on two key benefits of using Cilium:

Moving beyond a simple focus on packets, IP addresses, and ports, and instead treating service identity and API protocols (e.g., HTTP, gRPC, and Kafka) as first-class citizens in the platform.

Optimising Linux network forwarding, visibility, and filtering for the scale, dynamism, and deployment patterns (e.g., service mesh proxies) that are increasingly common in microservices environments.

Layer 7 Network Policy Examples

Allow all HTTP requests with method GET and path /public/.*. Deny all other requests.

Require the HTTP header X-Token: [0-9]+ to be present in all REST calls.
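The two rules above are simple predicates over an HTTP request. The minimal Python sketch below shows the matching logic they describe. It is not Cilium's implementation or policy format (in Cilium such rules are written declaratively in a network policy). `HttpRequest`, `allow_public_get`, and `has_valid_token` are hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass, field


@dataclass
class HttpRequest:
    """Hypothetical minimal view of an HTTP request as an L7 filter sees it."""
    method: str
    path: str
    headers: dict = field(default_factory=dict)


# Rule 1: allow GET requests whose path matches /public/.*; deny everything else.
PUBLIC_PATH = re.compile(r"/public/.*")


def allow_public_get(req: HttpRequest) -> bool:
    # fullmatch anchors the pattern to the whole path (an assumption about anchoring)
    return req.method == "GET" and PUBLIC_PATH.fullmatch(req.path) is not None


# Rule 2: require an X-Token header whose value is one or more digits.
TOKEN_VALUE = re.compile(r"[0-9]+")


def has_valid_token(req: HttpRequest) -> bool:
    # HTTP header names are case-insensitive, so normalise them before lookup
    headers = {name.lower(): value for name, value in req.headers.items()}
    value = headers.get("x-token")
    return value is not None and TOKEN_VALUE.fullmatch(value) is not None


if __name__ == "__main__":
    for r in [
        HttpRequest("GET", "/public/index.html", {"X-Token": "1234"}),
        HttpRequest("POST", "/public/upload", {"X-Token": "1234"}),
        HttpRequest("GET", "/private/keys", {"X-Token": "abc"}),
    ]:
        print(r.method, r.path, allow_public_get(r), has_valid_token(r))
```

Both rules look only at fields that a port-based filter never sees: the method, the path, and a header value.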

A typical microservices endpoint will expose tens or even hundreds of unique RPC calls:

A RESTful HTTP-based service often exposes POST/PUT/GET/DELETE for many different resource types, each represented by a URL prefix.

A Kafka broker will often have many different topics, and allow actions like produce and consume on each topic to different clients.

A datastore like Cassandra, Elasticsearch, MongoDB, Redis, or even MySQL and Postgres provides both read and write access to many distinct tables or indices.

As a result, port-based visibility and security are blind to individual RPC calls: they can only expose either all RPCs between two services or none at all.
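The all-or-nothing problem can be illustrated with a toy model of the Kafka case. A rule keyed on the destination port either admits every call to the broker or none, while a rule that can see the action and topic can admit exactly the calls a client needs. This is a hypothetical Python sketch: `KafkaRequest` and `ALLOWED_CALLS` are illustrative names, the action strings follow the "produce" and "consume" wording above rather than Kafka's wire protocol, and 9092 is simply Kafka's conventional default port.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KafkaRequest:
    """Hypothetical view of one Kafka call: destination port, action, topic."""
    dst_port: int
    action: str  # "produce" or "consume" (illustrative labels)
    topic: str


def port_rule_allows(req: KafkaRequest) -> bool:
    # A port-based rule can only see the destination port, so it
    # admits every action on every topic once the port is open.
    return req.dst_port == 9092


# Hypothetical API-aware policy: this client may only produce to "orders".
ALLOWED_CALLS = {("produce", "orders")}


def api_rule_allows(req: KafkaRequest) -> bool:
    # An API-aware rule can also inspect the action and the topic.
    return req.dst_port == 9092 and (req.action, req.topic) in ALLOWED_CALLS


if __name__ == "__main__":
    for call in [
        KafkaRequest(9092, "produce", "orders"),
        KafkaRequest(9092, "consume", "payments"),
        KafkaRequest(9092, "produce", "audit-log"),
    ]:
        print(call, "port rule:", port_rule_allows(call), "API rule:", api_rule_allows(call))
```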

With Cilium, identity is extracted from the container orchestrator and embedded in each network request (e.g., id=app1). Unlike an IP address, such an identity is consistent across the multiple replicas implementing a service, and consistent over time. Furthermore, Cilium provides API-aware visibility and security that understands HTTP methods and URLs, gRPC service methods, Kafka topics, etc., enabling fine-grained visibility and security at the granularity of individual RPCs between microservices.
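The idea of identity-based policy can be modelled in a few lines: every distinct label set maps to one identity, each IP address is mapped to the identity of the pod that holds it, and the policy refers only to identities. The sketch below is a toy model, not Cilium's data structures. `IdentityAllocator`, the starting value 1000, and all IP addresses are arbitrary assumptions for illustration.

```python
import itertools


class IdentityAllocator:
    """Toy model: hands out one numeric identity per distinct label set."""

    def __init__(self, first_id: int = 1000):
        self._ids: dict = {}
        self._counter = itertools.count(first_id)

    def identity_for(self, labels: dict) -> int:
        key = frozenset(labels.items())  # label order must not matter
        if key not in self._ids:
            self._ids[key] = next(self._counter)
        return self._ids[key]


alloc = IdentityAllocator()

# Two replicas of app1 on different hosts, plus one app2 pod (made-up IPs).
ip_to_identity = {
    "10.0.1.5": alloc.identity_for({"id": "app1"}),
    "10.0.2.9": alloc.identity_for({"id": "app1"}),
    "10.0.3.7": alloc.identity_for({"id": "app2"}),
}

# The policy names identities only: app2 may talk to app1.
ALLOWED = {(alloc.identity_for({"id": "app2"}), alloc.identity_for({"id": "app1"}))}


def is_allowed(src_ip: str, dst_ip: str) -> bool:
    return (ip_to_identity[src_ip], ip_to_identity[dst_ip]) in ALLOWED


# An app1 replica is rescheduled and receives a new IP: only the
# IP -> identity entry is added; the policy itself is untouched.
ip_to_identity["10.0.4.2"] = alloc.identity_for({"id": "app1"})
```

Because both app1 replicas, and the rescheduled one, resolve to the same identity, the single policy entry covers all of them without listing any IP address.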

This leads to visibility and security that are both radically simpler (based on consistent identities, not ephemeral IP addresses) and more powerful (visibility into individual RPC calls). As an example, below Cilium Microscope is used to view all HTTP requests to a microservice “app1” and log whether each request was allowed or denied by security policy:

cilium-microscope --to-selector id=app1 --type l7

([k8s:id=app2]) => ([k8s:id=app1]) http GET app1-service/public Forwarded

([k8s:id=app3]) => ([k8s:id=app1]) http GET app1-service/private Denied

...

With Cilium, there is no need to worry about how many container replicas are implementing service ‘app1’, what hosts these containers are running on, or what IP addresses the containers were assigned at any point in time.

The aforementioned KubeCon talk specifically measured iptables as a bottleneck for Kubernetes service forwarding and found that throughput degraded by ~30% with 5,000 services deployed, and by 80% with 10,000 services (a 6X performance difference). Likewise, rule updates at 5,000 services took 11 minutes, ages in a world of continuous delivery.

Thanks to the flexibility of BPF, Cilium performs the same operation with O(1) average runtime using a simple hash table built on a BPF map, so lookup latency stays constant at 10,000 or even 20,000 services. Likewise, updates to these BPF maps from userspace are highly efficient, so even with 20,000+ services, updating a forwarding rule takes microseconds, not hours.
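The difference between walking a chain of rules in order (the way iptables evaluates a chain) and probing a hash table can be modelled in a few lines of Python. This is a simplified model, not iptables or BPF code: the service table layout, the addresses, and the helper names (`build_services`, `linear_lookup`, `hash_lookup`) are hypothetical, and a Python `dict` stands in for the BPF hash map.

```python
def build_services(n: int) -> dict:
    """Hypothetical service table: (virtual IP, port) -> backend pod IPs."""
    table = {}
    for i in range(n):
        vip = f"10.96.{i // 256}.{i % 256}"
        table[(vip, 80)] = [f"10.0.{i % 250}.{j}" for j in (1, 2)]
    return table


def linear_lookup(rules: list, key: tuple):
    """Sequential-chain model: test each rule in order until one matches.

    Returns (backends, number_of_comparisons); backends is None on a miss.
    """
    for comparisons, (rule_key, backends) in enumerate(rules, start=1):
        if rule_key == key:
            return backends, comparisons
    return None, len(rules)


def hash_lookup(table: dict, key: tuple):
    """Hash-table model: a dict lookup is O(1) on average, whatever the size."""
    return table.get(key)


if __name__ == "__main__":
    for n in (5_000, 10_000, 20_000):
        table = build_services(n)
        rules = list(table.items())  # the same entries, as an ordered chain
        last = (f"10.96.{(n - 1) // 256}.{(n - 1) % 256}", 80)  # worst case for the chain
        backends, comparisons = linear_lookup(rules, last)
        assert backends == hash_lookup(table, last)
        print(f"{n:>6} services: chain walked {comparisons} rules; hash lookup went straight to the entry")
```

In the worst case the chain performs one comparison per installed service, so its cost grows linearly with the number of services, while the average cost of the hash lookup does not depend on it.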

An emerging deployment pattern for microservices is to use a local proxy (running either as a per-pod “sidecar proxy” or one proxy per Linux host) to implement a “service mesh” between a collection of microservices (for example, Istio uses Envoy in a sidecar model).

This approach requires all communication to and from a workload container to be redirected to this local proxy. This redirection must be transparent (i.e., no application changes) and secure (i.e., cannot be bypassed by the application). Istio currently performs this redirection at the packet level using iptables. But redirecting at the packet level means each byte of data passes through the entire TCP/IP stack, with Linux performing TCP congestion control and ACKing, breaking data into IP packets, and in some cases even passing it through a virtual Ethernet (veth) device.

The cost in terms of buffer memory, CPU cycles, context switches, and packet latency is quite high, all to effectively copy data from one Linux socket to another on the same host.